Hardware Implementations of Customized Optimization Engines: Model Predictive Control Example

- Category: Circuits & Systems

- Tags: ranko sredojevic, vladimir stojanovic

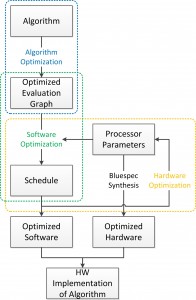

Numerical algorithms are the basis of many important technologies employed in industry and academia. Their efficient implementation is key in achieving good performance of simulation software, DSP for communication systems, multimedia, machine learning, and mission-critical real-time navigation and control. Unsurprisingly significant effort is still spent researching hardware and software acceleration of basic building blocks for numerical computation [1] [2] [3] . However, most of the previous work is based within one implementation layer of a computation system. Such layers include the algorithm to perform the computation, the software implementation, and the target hardware. Here we consider the entire abstraction layer of a system implementation, as shown in Figure 1, providing tight design-time coupling between them, with the ultimate goal of exploring numerical hardware platform tradeoffs at both limits of performance: high speed and low power.

For tight coupling of algorithm and processor physical layer constraints, our project focuses on the link between these layers: an optimizing compiler. Underneath the compiler, we use data abstraction and polymorphism in advanced HDLs to design a very flexible, extendable VLIW-style processor template. The design is fixed at the protocol level while the actual micro-architectural configuration (number of units, pipeline depth of every unit, etc.) is postponed through parameterization. Processor basic building blocks (crossbars, floating-point units, memories, etc.) are prototyped in the target technology to characterize maximum clock frequency as a function of pipeline depth for each unit. On top of the compiler, the algorithm evaluation graph is optimized through constant-folding, dead-code elimination, and similar compiler techniques. Finally, the link between the algorithm-side computational requirement and achievable performance is established through extensive compiler sweeps determining the optimal processor configuration for a given algorithm and technology tradeoffs.

We chose Model Predictive Control (MPC) of linear systems [1] [2] as a motivation and test vehicle for our design. It is an advanced control algorithm using many standard numerical blocks, e.g., as matrix-vector multiplications, matrix decompositions, linear system solvers. It has been extensively studied [1] [2] in embedded systems and hardware and software implementations.

Our results show that reductions of up to 4x in overall latency can be obtained by proper processor configuration. Furthermore, we show that the optimal latency point is not at any of the intuitive points, the minimum cycle count or the highest clock frequency, but depends on topological features of the computation graph. Currently, we are working on validating our predictions that even at ~20x clock penalty for FPGA implementations, we should expect ~8x improvement in latency from highly optimized implementations running on multi-GHz processors [1] , validating our cross-layer approach.

- J. Mattingley and S. Boyd, ” Real-time convex optimization in signal processing,” IEEE Signal Processing Magazine, vol. 27, no. 3, pp. 50-61. [↩] [↩] [↩] [↩]

- K. V. Ling, S. P. Yue, and J. M. Maciejowski, “An FPGA implementation of model predictive control,” in American Control Conference, 2006, pp. 1930-1935. [↩] [↩] [↩]

- Leung, B. et al., “An interior point optimization solver for real time inter-frame collision detection: Exploring resource-accuracy-platform tradeoffs,” in International Conference on Field Programmable Logic and Applications, 2010, pp. 113-118. [↩]